We detect and decode individual units in transformer MLPs that convert visual information into semantically related text. Joint visual and language supervision is not required for the emergence of multimodal neurons: we find these units in the case where only a single linear layer augments a text-only transformer with vision, using a frozen vision-only encoder.

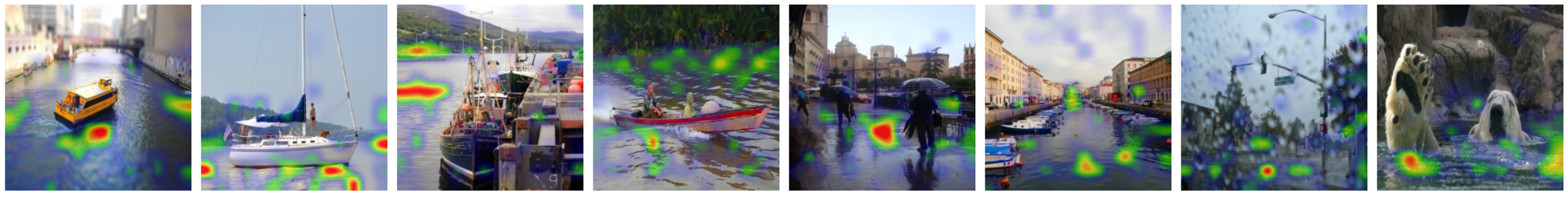

For example, MLP unit 9058 in Layer 12 of GPT-J injects language related to swimming, swim, fishes, water, Aqua into the model's next token prediction, and is maximally active on images and regions containing water, across a diversity of visual scenes.

Language models demonstrate remarkable capacity to generalize representations learned in one modality to downstream tasks in other modalities. Can we trace this ability to individual neurons? We study the case where a frozen text transformer is augmented with vision using a self-supervised visual encoder and a single linear adapter layer learned on an image-to-text task. Outputs of the adapter are not immediately decodable into language describing image content; instead, we find that translation between modalities occurs deeper within the transformer. We introduce a procedure for identifying "multimodal neurons" that convert visual representations into corresponding text, and decoding the concepts they inject into the model’s residual stream. In a series of experiments, we show that multimodal neurons operate on specific visual concepts across inputs, and have a systematic causal effect on image captioning.

@inproceedings{schwettmann2023multimodal,

title={Multlimodal Neurons in Pretrained Text-Only Transformers},

author={Schwettmann, Sarah and Chowdhury, Neil and Klein, Samuel and Bau, David and Torralba, Antonio},

booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision},

pages={2862--2867},

year={2023}

}